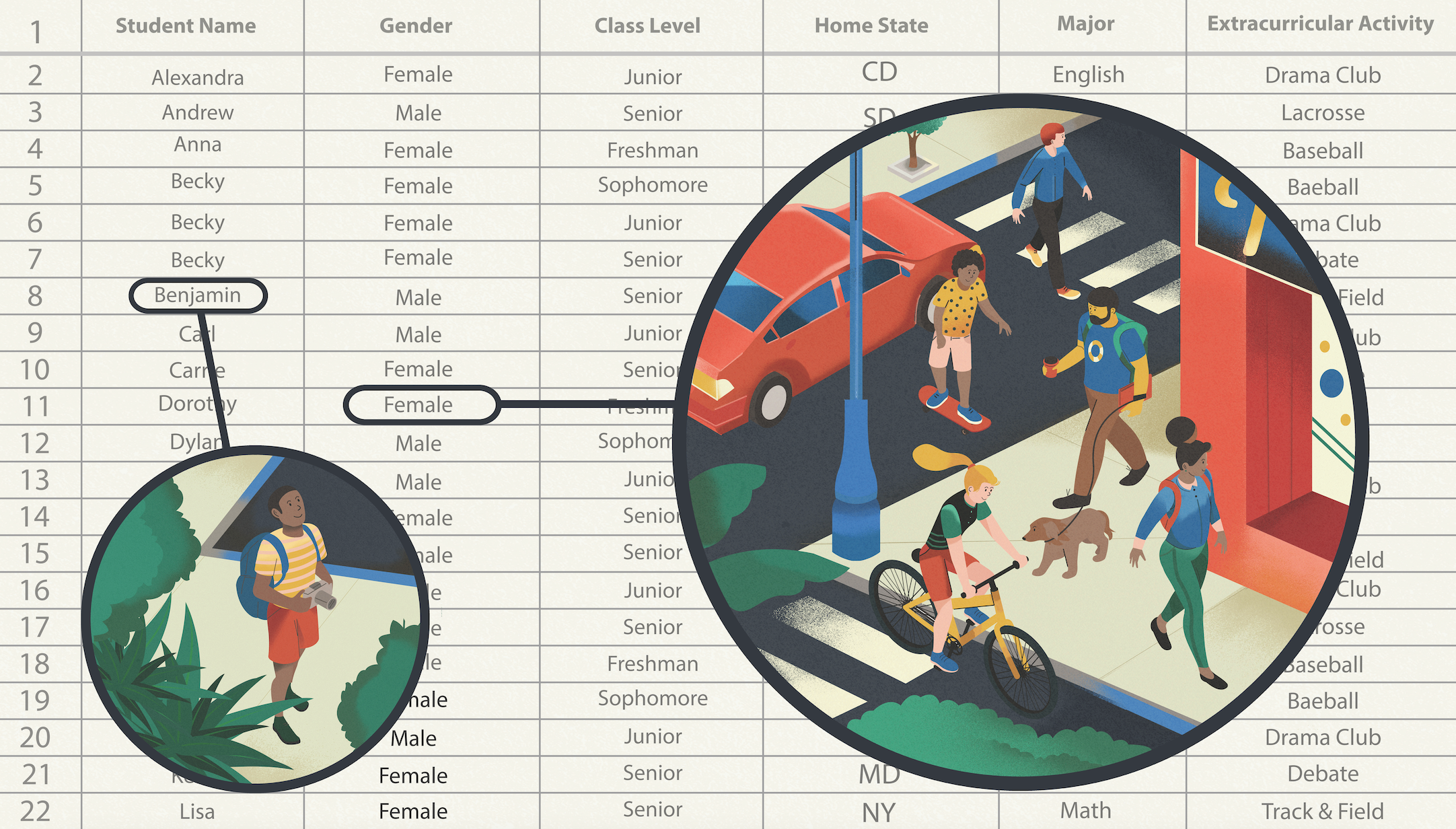

When you’re first learning data science, there’s often a moment when someone pulls the curtain back. Come with me, they say, and learn the truth about what we all do here. Usually, this is your introduction to the nitty-gritty world of messy data and data cleaning. Sometimes this moment will come in the form of a lecture titled “Data Cleaning” or a textbook chapter named “Data Munging.” Sometimes it will be a blog post that introduces you to the wild world of “Data Wrangling.” But all of these terms describe the same idea: You will have to confront errors and gaps in your datasets, and many models can’t be built until you transform datasets into something more uniform. Learning to clean and process this “dirty data”—rendering a dataset into a standardized version of itself, and likely excluding data points that won’t align with our analytics—is a key part of a data scientist’s workflow.

But there are two things missing from many of these lectures, chapters, and blog posts. I believe these two things will make data science more accurate, rigorous, and impactful: a deeper focus on the meaning of dirty data and a greater recognition of data scientists who are willing to get their hands dirty. As data scientists, we need to learn to uncover the meaning of dirty data and why it got that way, and as a field, we need to value the data scientists who specialize in data collection and other components of research design.

Sometimes I like to call this “when we miss missingness,” a quip to remind myself that we need to understand data not just in terms of datasets but in terms of the context around it. We need to ask what data was collected and, perhaps more importantly, what data was left out. To me, this can be a moment for insights as impactful as a complex model, the why along with the what. These are the questions of research design. Understanding the research design behind a dataset is the bookend to the models, the parenthetical that gives a stable foundation to future analyses.

Research design asks questions like: Why were these variables operationalized this way? Are there hidden confounders in this dataset? Are these variables truly independent, or do they dynamically change each other? Does the data at hand accurately represent the question I am trying to ask, and if not, should I modify my question? Are there important threats to the validity of my design, the inferences that I can make about what causes what? I believe that most of us working in data science want to ask these questions, but we often aren’t given enough space, time, and tools to make research design a formal part of our workflow.

Once, I was working on a data science project for an educational game used by students. I noticed that in the datasets we had gathered, there was an area of the game where the data engineering system hadn’t measured students’ activity. This was a “bonus” recreation area of the game, a break from the rest of the exercises that we were measuring. I wondered why this recreation activity didn’t show up in our measures. What hypothesis might the missing measures introduce? I wondered if students who took more frequent breaks in this “bonus” area could end up feeling more motivated and engage more deeply in the educational aspect of the game. I took this question to my collaborators, and we created a new dataset that included the “bonus” area. Sure enough, a new model yielded a finding that no one had expected: Students did benefit from their breaks, and this helped us make product recommendations, which in turn improved the impact that the exercises were having on students’ learning.

Research design can give us the chance to not just filter away missingness but to learn from it. Real datasets as they exist teach us about what was selected and about who gets a voice. We might find that we thought we were measuring one type of variable but we were actually measuring another. We might realize several of our measurements are different versions of the same underlying pattern. Often, missingness in our data is systematic—certain groups of people or certain types of behaviors aren’t represented. Research design lets us ask why.

Research design thinking can also help us truly see the data already at hand. Often in data science projects, I’ve found that no one even looks at my choices during data cleaning. I could throw out data, filter variables, and discard outliers to get to a workable dataset that yields the results I want to find. But data cleaning with abandon is dangerous; there’s no free lunch in throwing away data. Sometimes an outlier is not really an error. If it comes from a group of people who are underrepresented in our dataset, it may carry important and true signals about how different the data would look for that group. We may also hold assumptions about our variables that can be corrected once we notice how differently they are shaped in the real world. Sometimes we might discover that a new round of data collection is needed.
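To make the outlier point concrete, here is a small simulated sketch. Everything in it is a hypothetical choice for illustration (the group labels, group sizes, score distributions, and the 3-standard-deviation cutoff), not data from any real project. It applies a common pooled z-score outlier rule and then checks, group by group, which rows the rule threw away.

```python
import random
import statistics


def simulate(seed=0, n_major=950, n_minor=50):
    """Hypothetical data: one score per person, tagged with a group label.
    The smaller group genuinely scores higher; none of its values are errors."""
    rng = random.Random(seed)
    rows = [("majority", rng.gauss(50, 5)) for _ in range(n_major)]
    rows += [("minority", rng.gauss(70, 5)) for _ in range(n_minor)]
    return rows


def zscore_filter(rows, threshold=3.0):
    """Drop rows more than `threshold` pooled SDs from the pooled mean."""
    values = [v for _, v in rows]
    mu = statistics.mean(values)
    sd = statistics.pstdev(values)
    return [(g, v) for g, v in rows if abs(v - mu) / sd <= threshold]


def removal_rate_by_group(before, after):
    """Share of each group's rows that the filter removed."""
    rates = {}
    for group in {g for g, _ in before}:
        n_before = sum(1 for g, _ in before if g == group)
        n_after = sum(1 for g, _ in after if g == group)
        rates[group] = 1 - n_after / n_before
    return rates


rows = simulate()
kept = zscore_filter(rows)
rates = removal_rate_by_group(rows, kept)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.1%} of rows removed as 'outliers'")
```

Because the pooled mean and standard deviation are dominated by the larger group, a rule that looks neutral ends up discarding a large share of the smaller group's perfectly valid rows while barely touching the majority.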

And when no one but you sees the choices during data cleaning, it can be difficult to feel valued and recognized, even if you do important work there. We talk a lot about how data cleaning is inevitable, but data science job descriptions rarely list the skills of research design. Data scientists who spend a long time refining better measurements or pointing out the context of other uncollected data often choose long-term impact over short-term findings, and in fast-paced data science organizations, it can be difficult to find the motivation to invest in longitudinal understanding. But as a field, we will do better, more impactful, more ethical work when we value research design along with modeling.

When I was first transitioning into working as a full-time data scientist, I took a data science course that had an early lesson on data cleaning. This course taught only two methods for dealing with missing data: either drop rows with missing data entirely, or impute new values to fill in the gaps. What was never discussed was why you might do one of these things. But the why matters profoundly. Coming into the field as a social scientist who had spent years learning how deeply research design shapes the interpretation of data, I was stunned to see how often data science education skipped over asking why data might be missing. When a dataset is systematically missing certain kinds of people, observations, or events, both dropping observations and imputing values based on systematically different observations can introduce profound biases.
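A minimal simulated sketch of why both textbook options can fail when missingness is systematic. It assumes a hypothetical population in which one group (30% of people) scores higher and is also far more likely to have its value missing; the group shares, means, and missingness rates are illustrative assumptions, not real data. It compares the true mean with the mean after dropping incomplete rows and after filling gaps with the overall observed mean.

```python
import random
import statistics


def simulate(seed=1, n=10_000):
    """Hypothetical population: group B scores higher AND is far more often missing."""
    rng = random.Random(seed)
    ys, observed = [], []
    for _ in range(n):
        in_b = rng.random() < 0.3
        y = rng.gauss(70 if in_b else 50, 10)
        # Systematic missingness: the chance of being unrecorded depends on group.
        p_missing = 0.6 if in_b else 0.05
        ys.append(y)
        observed.append(None if rng.random() < p_missing else y)
    return ys, observed


ys, observed = simulate()
true_mean = statistics.mean(ys)

# Option 1: drop rows with missing values (complete-case analysis).
complete = [y for y in observed if y is not None]
dropped_mean = statistics.mean(complete)

# Option 2: fill each missing value with the mean of the observed values.
fill = statistics.mean(complete)
imputed = [fill if y is None else y for y in observed]
imputed_mean = statistics.mean(imputed)

print(f"true mean:             {true_mean:.1f}")
print(f"after dropping:        {dropped_mean:.1f}")
print(f"after mean-imputing:   {imputed_mean:.1f}")
print(f"SD observed / imputed: {statistics.pstdev(complete):.1f} / {statistics.pstdev(imputed):.1f}")
```

Both options pull the estimate toward the over-represented group, and mean imputation additionally shrinks the spread of the data. In this particular simulation the group label is recorded, so imputing within each group would recover much of the gap; if missingness depended on the unobserved value itself, no imputation from the data at hand could fix it. Knowing which situation you are in requires asking why the data is missing.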

Incomplete research design can be at the root of algorithmic models that reproduce discriminatory bias in health care, justice systems, financial systems, and more. In December 2020, as US healthcare systems were beginning to distribute the COVID-19 vaccines to frontline healthcare workers, the Stanford Medical Center deployed an algorithm to assign prioritization to hospital employees based on data about their relative risk of exposure to COVID-19.¹ The intention behind this algorithmic decision-making was to use data to improve equitable access to vaccines. Instead, it impeded it. Medical residents were frontline workers at high risk for exposure. But because residents rotated between departments and therefore did not have an assigned departmental “location” in the data used for the algorithm, they were erroneously deprioritized. A missing variable led to missing key context. Stanford Medicine withdrew the algorithm and issued a public apology. This was a failure of data-driven decision-making, but it was also a failure of data understanding.

I hope to work toward a different future. In my data science projects now, I carve out the space for research design conversations. I consider these questions as critical as any models, effects, and findings. In my mentorship and teaching, I encourage future data scientists to treat their questions and wonderings during the data cleaning stage as explicit skills they can develop, and I point them toward tools from social science and other fields to better scope issues like operationalization, causal inference, and how we know what questions we can answer.

When data scientists are allowed to spend this time on the meaning of our data, we become more empowered to collaborate on its design. Doing real-world data science becomes less about racing toward a model and pitting models against each other and more about deeply understanding where and why we collected certain types of data. Of course, not everyone will do every piece of this process. There’s a lot of work to be done with data, and data scientists will always need to collaborate with other domain experts. But the world needs a future data science that asks why and doesn’t miss meaning.

Cat Hicks leads Catharsis Consulting, an end-to-end research consultancy which specializes in applied research projects on complex human behavior for organizations developing ethical and rigorous research & data science capabilities. Cat holds a PhD in quantitative experimental psychology from UC San Diego and has led research at organizations such as Google and Khan Academy. Cat is driven by a deep commitment to work that creates access for underrepresented and marginalized learners and has been an advocate for students with disrupted educational paths for over fifteen years.

1 Chen, C. Only seven of Stanford’s first 5,000 vaccines were designated for medical residents. ProPublica. https://www.propublica.org/article/only-seven-of-stanfords-first-5-000-vaccines-were-designated-for-medical-residents.